Background

Centuries ago, clockmakers started to add extra features to clocks and watches to attract buyers and enhance their reputation. They achieved these additional functions, called “complications”, through a combination of mechanical components. These components were completely predictable to the clockmaker who understood the whole system, but almost magical to the untrained eye.

The aviation industry is full of machines, systems, and processes, often so complicated that few, if any, can fully understand their inner workings. Unfortunately, as soon as we encounter external unknowns, such as people, weather, politics, or pandemics, our systems become complex. They form a socio-technical structure, where even a complete understanding of the entire system can’t guarantee an accurate prediction of the outcome of any particular sequence of events.

The Swiss Cheese Model

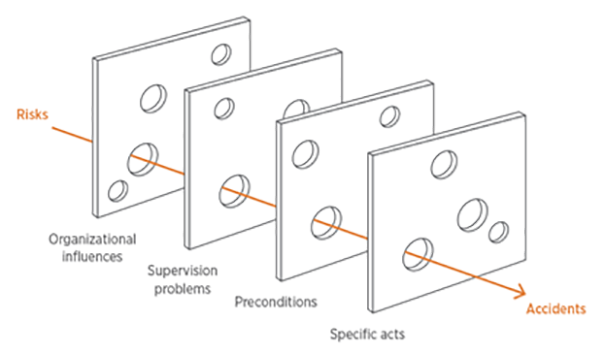

Where a sequence might lead to an adverse result, we need an understanding of the chain of events to interrupt the cause and prevent the accident. The “Swiss Cheese Model” is an appropriate metaphor for this scenario, and arguably the most famous in the aviation world. James Reason first described the model (Human Error: Models and management, 2000) as a simplification of his “Organisational Accident Model”. Reason uses slices of cheese as a metaphor – each slice represents equipment, systems, processes, or even human performance that contributes to the prevention of an accident or undesirable event.

The holes found in Swiss cheese represent gaps or weaknesses in systems or processes, or limits to human performance. The implication of the metaphor is that accidents can be avoided by identifying a sequence of events that can lead to an accident, identifying the weaknesses in any safety barriers, and mitigating them (moving the holes in the cheese). One should keep in mind that in a complex system an accident sequence may not be as predictable as Reason’s metaphor assumes.

Our brain can be our worst ally

When we come across a new situation, our brain looks for similarities with previous events and, where possible, picks a previously learned response or even a context. Occasionally a situation that is broadly similar to previous experience requires a different response, but our brain simply picks the best fit. Just think of all those hire cars you’ve seen using their wipers as they approach a junction and only then indicating, even on a dry day.

One of the consequences of the way our brain works is our habit of predicting a result based on past experience, and of interpreting the situation to match that prediction. So unless events move a long way from our expectation, our brain will fix on those moments that align with our mental model and try to ignore or explain away anything that disagrees with it. This is called “confirmation bias” – we tend to see what we expect to see.

The problem is that it’s hard to recognise when we’re not seeing the whole picture. Our brain is hardwired to be lazy and take a shortcut to a known solution. When we see a result repeated again and again from a broadly similar starting position, we assume we’ve identified and understood the process – we’ve mastered the “complication” and can be sure of the outcome.

Confirmation bias can lead to catastrophe

Confirmation bias means that we’re naturally very poor at dealing with complex, low probability events: those where there is a chance of failure, but a series of past successes has told us otherwise, so it’s hard to believe a failure might happen.

Take the Space Shuttle Challenger disaster as an example. The technicians were sure from previous testing that the outside air temperature was too cold for the effective operation of the booster O-ring seals. And they were right. But during previous flights, they’d carried out operations close to those temperatures without any trouble. Despite empirical evidence from testing, managers convinced themselves that a failure wouldn’t happen on this occasion. The seals failed, and seven astronauts died.

Many safety awareness courses use the “Swiss Cheese Model” as the accident model on which safety management is built, and many of those are based on the metaphor rather than the underlying theories of Reason’s “Organisational Accident Model”. The problem with this is that many non-safety professionals look at accident prevention through the lens of the metaphor rather than the theory – they believe barriers to be either effective or ineffective, and view an accident sequence backwards from the outcome as an inevitable singular chain of events.

Our inability to imagine low probability events means that a period of accident-free operations can be seen as cast-iron proof of an effective system or, even worse, as a reason to start arbitrarily removing barriers (naturally the most expensive ones).
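To see why a clean record is weak evidence, a little arithmetic helps. The sketch below is only an illustration under a deliberately simple assumption: every operation carries the same small accident probability, independently of the others. Real socio-technical systems are rarely that tidy, and both the function name and the figures are hypothetical, chosen purely to show the shape of the problem.

```python
def prob_no_accident(p_per_operation: float, n_operations: int) -> float:
    """Chance of n consecutive accident-free operations.

    Assumes (for illustration only) that each operation independently
    carries the same accident probability p_per_operation.
    """
    return (1.0 - p_per_operation) ** n_operations


if __name__ == "__main__":
    # Hypothetical risk levels and run lengths, not real aviation data.
    for p in (1e-3, 1e-4):
        for n in (100, 1000):
            chance = prob_no_accident(p, n)
            print(f"risk per operation {p:g}, {n} operations: "
                  f"{chance:.1%} chance of a clean record")
```

Under these assumptions, a system with a 1-in-1,000 risk per operation still has roughly a 90% chance of completing 100 operations without an accident. The clean record looks the same whether the barriers are working or we have simply been lucky so far.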

Always keep human error front of mind

It’s essential to understand human error and to take a “human in the system” approach when developing the policies, processes and procedures focused on delivering safe operations within a regulatory framework. It’s also important that, once businesses have put in place these processes, procedures, and risk controls, they regularly monitor them for residual signs of risk and check they’re still working as intended. This is why an Internal Evaluation Program (IEP) is a critical safety assurance component of an integrated Safety Management System.

It’s equally vital that decision makers, post-holders and accountable managers are aware of their own limitations and the human factors that affect decision making. Complex systems are not necessarily predictable – our own minds coax us toward what we know, and can stop us from properly assessing low probability risks.

Aviation safety, however, is all about managing these complex, low probability, high severity risks, which means we humans are exposed. Regular safety leadership training is essential to ensure that leaders understand the threats to effective decision making that exist within their own psyche and, more importantly, have the skill, confidence, and self-awareness to manage safely.

This article is part of our Flight Plan series.